Research Insights

Pushing the Boundaries of AI Memory: Galaxia’s 1-Billion-Character Leap

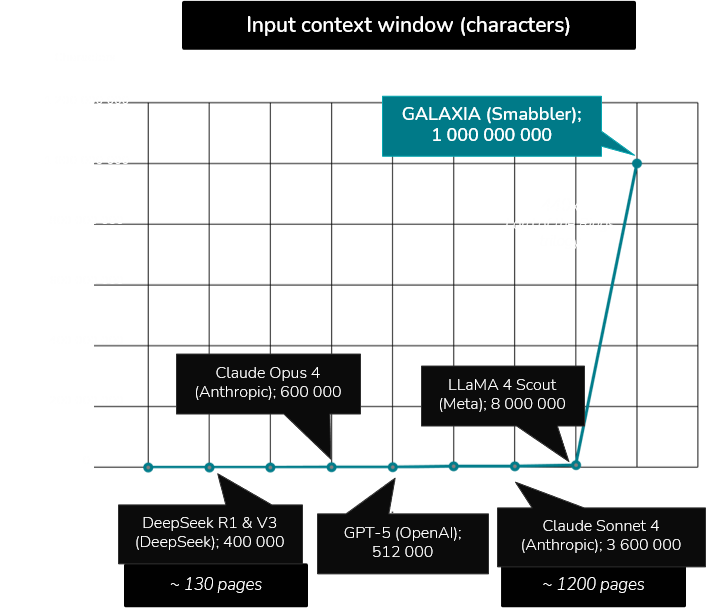

- Galaxia extends AI’s memory far beyond today’s context-window limits - processing over 1 billion characters in a single pass, entirely on CPU and RAM.

- This breakthrough enables organizations to instantly turn their entire knowledge base - thousands of documents or reports - into a live, conversational intelligence layer, something standard retrieval systems can’t achieve.

- It means teams can query, analyze, and reason across company-wide information using natural language - without retraining, complex pipelines, or data silos.

- For all their power, today’s large language models (LLMs) still operate within one critical constraint - their memory.

- Every model, from GPT-4o to Gemini or Claude, depends on a context window - the space in which it can “see” and reason at once.

- This context defines how coherently an AI can understand complex documents, sustain multi-step reasoning, or connect ideas over long chains of information.

- And yet, even state-of-the-art systems are limited to hundreds of thousands - or at best, a few million - tokens. Beyond that, logic fades, context fragments, and explainability disappears.

The Limitation Behind the Models

- As LLMs process more text, their reasoning quality begins to degrade:

  - Earlier context gets lost or diluted within the attention mechanism.

  - Causal and semantic links weaken over longer passages.

  - Computational cost grows quadratically with context length in standard self-attention, which becomes expensive on GPU-heavy infrastructure.

- This makes LLMs fast and flexible - but shallow when it comes to persistence, memory, and auditability.
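To make the cost growth concrete, here is a back-of-the-envelope sketch. In standard self-attention every token is compared with every other token, so the query-key score matrix grows with the square of the context length. The sketch counts only that term for a single head; the head dimension of 128 and the 128,000-token baseline are illustrative assumptions, and real models add linear-cost layers and kernel optimizations, so treat the ratios as indicative only.

```python
def attention_score_ops(seq_len: int, head_dim: int = 128) -> int:
    """Approximate multiply-adds for the QK^T score matrix of one attention head.

    Each of the seq_len query vectors is dotted with each of the seq_len key
    vectors, and every dot product costs head_dim multiply-adds.
    """
    return seq_len * seq_len * head_dim


base = 128_000  # illustrative baseline context length in tokens (assumption)
for n in (128_000, 256_000, 1_000_000):
    # Doubling the context quadruples this term; 8x the context costs ~64x.
    ratio = attention_score_ops(n) / attention_score_ops(base)
    print(f"{n:>9,} tokens -> {ratio:6.1f}x the score-matrix work of {base:,}")
```

Doubling the window from 128,000 to 256,000 tokens quadruples the score-matrix work, which is why simply stretching context windows becomes costly.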

Enter Galaxia: Memory at Scale, Built for Understanding

- Galaxia introduces a semantic hypergraph memory system capable of analyzing over 1 billion characters in a single pass, running on CPUs and RAM rather than GPUs. That is equivalent to roughly 500,000 pages, or 440 times the length of the entire Lord of the Rings trilogy.
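The scale figures can be sanity-checked with simple arithmetic. The sketch below derives what the quoted equivalences imply. The 4-characters-per-token ratio is a common rule of thumb for English text, not a Galaxia figure; actual token counts depend on the tokenizer.

```python
TOTAL_CHARS = 1_000_000_000  # "over 1 billion characters" (from the text)
PAGES = 500_000              # page equivalence quoted in the text
LOTR_MULTIPLE = 440          # "440 times the Lord of the Rings trilogy" (from the text)
CHARS_PER_TOKEN = 4          # rough English heuristic (assumption; tokenizer-dependent)

chars_per_page = TOTAL_CHARS / PAGES
chars_per_trilogy = TOTAL_CHARS / LOTR_MULTIPLE
approx_tokens = TOTAL_CHARS / CHARS_PER_TOKEN

print(f"implied characters per page:    {chars_per_page:,.0f}")
print(f"implied characters per trilogy: {chars_per_trilogy:,.0f}")
print(f"approximate token count:        {approx_tokens:,.0f}")
```

Under that assumption, 1 billion characters is on the order of 250 million tokens, roughly two orders of magnitude beyond the few-million-token windows mentioned earlier.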

- Instead of enlarging the transformer’s context window, Galaxia takes a different route: it structures knowledge as interconnected meaning graphs, enabling reasoning that remains explainable, persistent, and transparent across entire domains - from research archives to regulatory datasets.
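To illustrate the general idea of a semantic hypergraph, here is a toy sketch. It is not Galaxia’s data model, which the text does not describe. Each statement is stored as a hyperedge that joins any number of concept nodes and records the source it came from. A breadth-first search then returns the chain of statements linking two concepts, one simple way an answer can be traced back to its sources and reasoning path. The class names, relations, and example data are all hypothetical.

```python
from __future__ import annotations

from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class HyperEdge:
    """One statement linking any number of concepts, tagged with its source (hypothetical schema)."""
    relation: str
    nodes: frozenset[str]
    source: str


class HypergraphMemory:
    """Toy in-memory hypergraph: concepts are nodes, statements are hyperedges."""

    def __init__(self) -> None:
        self.edges: list[HyperEdge] = []
        # concept -> every hyperedge that mentions it, for fast neighbour lookup
        self.index: defaultdict[str, list[HyperEdge]] = defaultdict(list)

    def add(self, relation: str, nodes: set[str], source: str) -> None:
        edge = HyperEdge(relation, frozenset(nodes), source)
        self.edges.append(edge)
        for node in edge.nodes:
            self.index[node].append(edge)

    def path(self, start: str, goal: str) -> list[HyperEdge] | None:
        """Breadth-first search; returns the chain of statements linking start to goal, or None."""
        came_from: dict[str, tuple[str, HyperEdge] | None] = {start: None}
        frontier = deque([start])
        while frontier:
            node = frontier.popleft()
            if node == goal:
                chain: list[HyperEdge] = []
                step = came_from[node]
                while step is not None:
                    prev, edge = step
                    chain.append(edge)
                    step = came_from[prev]
                return chain[::-1]  # reorder from start to goal
            for edge in self.index.get(node, []):
                for neighbour in edge.nodes:
                    if neighbour not in came_from:
                        came_from[neighbour] = (node, edge)
                        frontier.append(neighbour)
        return None


# Invented example data: two statements from different documents share "Product A".
memory = HypergraphMemory()
memory.add("regulates", {"Regulation X", "Product A"}, source="policy.pdf, p. 4")
memory.add("supplied_by", {"Product A", "Vendor B", "Region C"}, source="contracts.xlsx, row 17")

for edge in memory.path("Regulation X", "Vendor B") or []:
    print(edge.relation, sorted(edge.nodes), "<-", edge.source)
```

Because a hyperedge can join three or more concepts at once, a single statement such as a supply contract stays intact instead of being split into pairwise links, and every hop in the returned chain carries its source.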

- In practice, this changes what’s possible:

  - From recall to reasoning - connecting information across thousands of documents in one coherent view.

  - From short-term to long-term context - knowledge persists beyond a single interaction.

  - From black-box output to transparent logic - every answer can be traced back to its source and reasoning path.

  - From GPU dependence to sustainable AI - full-scale inference runs in-memory on efficient CPUs.

A Complement to, Not a Replacement for, LLMs

- Galaxia doesn’t compete with LLMs - it completes them.

- When paired together, the LLM handles language and generation, while Galaxia provides the structured memory and semantic continuity that enable reasoning over time.

- This hybrid architecture forms the foundation of Explainable Intelligence - systems that don’t just answer but can show why.
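The division of labour described in this section can be sketched as a thin orchestration layer. This is a hypothetical illustration, not Galaxia’s interface: `retrieve` stands in for any structured-memory lookup and `generate` for any LLM call, both passed in as plain functions so the sketch runs without external services. The point is that the retrieved facts travel alongside the reply, so the answer can be checked against its sources.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Fact:
    """A statement returned by the memory layer, with its provenance (hypothetical schema)."""
    text: str
    source: str


def build_grounded_prompt(question: str, facts: list[Fact]) -> str:
    """Number each fact so the model can cite [1], [2], ... and readers can trace citations to sources."""
    numbered = [f"[{i}] {fact.text} (source: {fact.source})" for i, fact in enumerate(facts, start=1)]
    return (
        "Answer using only the numbered facts below and cite them by number.\n"
        + "\n".join(numbered)
        + f"\n\nQuestion: {question}"
    )


def answer(
    question: str,
    retrieve: Callable[[str], list[Fact]],
    generate: Callable[[str], str],
) -> tuple[str, list[Fact]]:
    """The memory layer supplies facts; the language model only phrases the reply."""
    facts = retrieve(question)
    reply = generate(build_grounded_prompt(question, facts))
    return reply, facts  # returning the evidence keeps the answer auditable


# Hypothetical stand-ins so the sketch runs offline; swap in a real memory store and LLM client.
def stub_retrieve(question: str) -> list[Fact]:
    return [Fact("Product A is subject to Regulation X", "policy.pdf, p. 4")]


def stub_generate(prompt: str) -> str:
    return "Product A is subject to Regulation X [1]."


reply, evidence = answer("Which rules apply to Product A?", stub_retrieve, stub_generate)
print(reply)
for fact in evidence:
    print("evidence:", fact.source)
```

Keeping retrieval and generation behind separate callables lets either side be replaced independently, while the returned evidence list is what allows the system to show why, not just answer.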